The AI-Driven Data Center Boom

Innovations Taming the Power Beast

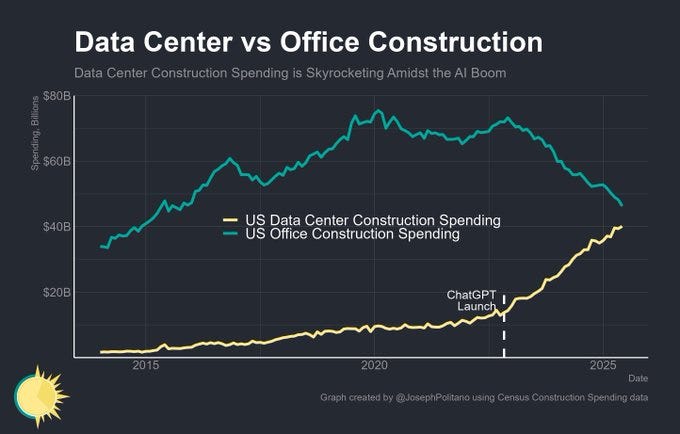

The U.S. construction landscape is undergoing a remarkable transformation. Census data analyzed by Joseph Politano charts a startling reversal. From 2015 through projected 2025, office construction spending has stagnated at $60-80 billion per year amid the shift to remote work and economic uncertainty. Data center construction, in sharp contrast, is rocketing upward: from less than $10 billion annually in the early 2020s to a projected $50 billion or more by 2025. The catalyst? The 2022 launch of ChatGPT, which ignited an AI revolution that has redefined infrastructure priorities. Data centers have become the new epicenters of technological and economic growth, surging just as office buildings fade.

But this boom brings new challenges. Power consumption, already a critical concern, has reached historic highs. As 2025 unfolds, understanding where that power goes and curbing it through innovation is vital for sustainable growth.

Power Usage Patterns in the AI Era

AI’s insatiable energy demands stem from its reliance on specialized hardware such as high-end GPUs, which draw far more power than legacy servers. A single top-tier NVIDIA GPU can consume around 700 W. Across a cluster of 100,000 such GPUs, the chips alone draw about 70 MW, and the largest planned AI campuses reach into the gigawatts. By some estimates, training a model like GPT-4 emits as much carbon as driving a gasoline car 5-20 miles for every million tokens processed.
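The arithmetic behind cluster-scale power is simple multiplication, and it shows why gigawatt figures imply very large fleets. The sketch below uses the 700 W per-GPU figure from the text; the `non_gpu_factor` (CPUs, memory, networking) and `pue` values of 1.5 are illustrative assumptions rather than measured data, and the function names are hypothetical.

```python
GPU_WATTS = 700.0  # per-GPU draw cited in the text for a top-tier NVIDIA GPU


def gpu_power_mw(num_gpus: int, watts_per_gpu: float = GPU_WATTS) -> float:
    """Power drawn by the GPUs alone, in megawatts."""
    return num_gpus * watts_per_gpu / 1e6


def facility_power_mw(
    num_gpus: int,
    watts_per_gpu: float = GPU_WATTS,
    non_gpu_factor: float = 1.5,  # assumption: rest of each server (CPUs, memory, network)
    pue: float = 1.5,  # assumption: cooling and power-distribution overhead
) -> float:
    """Rough whole-facility draw, in megawatts, for a GPU cluster."""
    it_mw = gpu_power_mw(num_gpus, watts_per_gpu) * non_gpu_factor
    return it_mw * pue


for n in (1_000, 100_000, 1_000_000):
    print(f"{n:>9,} GPUs: {gpu_power_mw(n):7.1f} MW (GPUs only), "
          f"~{facility_power_mw(n):7.1f} MW (facility)")
```

Under these assumptions, a thousand GPUs need only a megawatt or two; reaching a gigawatt takes several hundred thousand GPUs.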

As AI becomes integral to real-time services, inference (running trained models in production) now dominates energy needs. One projection forecasts a staggering 122% compound annual growth rate (CAGR) in inference power demand through 2028. Globally, data center electricity use could double to 945 TWh by 2030, roughly Japan’s total annual consumption. In the U.S., data centers, driven largely by AI, may account for 9% of all grid power by 2030, up from 3-4% today, placing immense strain on aging infrastructure and raising blackout risks.
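Growth rates like these compound quickly. A minimal sketch of the compounding arithmetic follows; the five-year horizon for the 122% figure and the six-year horizon for the doubling are assumptions, since the projections’ base years are not stated here, and the helper names are illustrative.

```python
def compound_multiplier(cagr: float, years: float) -> float:
    """Total growth factor after `years` at a constant annual rate (1.22 means +122%/year)."""
    return (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# 122% CAGR sustained for an assumed five years (e.g. 2023-2028).
print(f"Inference demand multiplier: {compound_multiplier(1.22, 5):.0f}x")
# Doubling to 945 TWh, assuming six years of growth (e.g. 2024-2030).
print(f"Implied annual growth: {implied_cagr(1.0, 2.0, 6):.1%}")
```

In other words, 122% annual growth held for five years means roughly a 54-fold increase, whereas doubling over six years requires only about 12% a year.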

A major culprit is cooling, which consumes as much as 40% of total energy at many sites. Many data centers operate at a Power Usage Effectiveness (PUE) above 1.5, meaning that for every watt delivered to IT equipment, at least another half-watt goes to overhead such as cooling, lighting, and power distribution; overhead therefore accounts for a third or more of all site power. Major cloud providers like Google and Microsoft are spending billions to keep up, especially in places like Virginia and Texas, where electricity demand could jump 81% due to AI. AI’s volatile, peak-heavy workloads add further complexity, intensifying stress on local grids.
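PUE is total facility power divided by the power delivered to IT equipment, so the share of site power lost to overhead is (PUE - 1) / PUE. The helper names below are hypothetical; the sketch shows why a PUE of 1.5 corresponds to a third of site power, and what PUE a 40% cooling share implies.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    return total_facility_kw / it_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of all site power spent on non-IT overhead (cooling, lighting, distribution)."""
    return (pue_value - 1.0) / pue_value


def min_pue_for_overhead(share: float) -> float:
    """Lowest possible PUE when `share` of total site power goes to overhead."""
    return 1.0 / (1.0 - share)


for value in (1.1, 1.5, 2.0):
    print(f"PUE {value}: {overhead_share(value):.0%} of site power is overhead")
# If cooling alone takes 40% of total energy, PUE is at least 1 / 0.6.
print(f"PUE with a 40% cooling share: >= {min_pue_for_overhead(0.4):.2f}")
```

Overhead only reaches half of all site power at a PUE of 2.0; at 1.5 it is one third, and a 40% cooling share alone implies a PUE of at least about 1.67.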

Environmental impacts are mounting. Without intervention, data centers could emit as much CO2 as the airline industry by 2030. Yet, even as electricity needs soar, the sector is responding with a new wave of efficiency innovations.

Innovations Mitigating the Power Crunch

A surge of innovations is meeting AI’s power challenge head-on, spanning hardware, software, and sustainable energy solutions.

Advanced Cooling Technologies

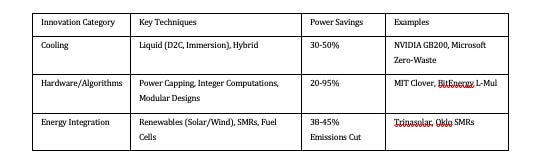

Traditional air cooling can no longer keep up with AI’s heat. Liquid cooling has emerged as the new standard, carrying coolant directly to hot components and cutting cooling energy by 30-50% compared with air alone. This shift may enable up to 15 GW of additional global capacity by 2028. Leading variants include direct-to-chip (D2C) cooling, which Microsoft uses in new closed-loop designs that consume zero water for cooling, and immersion cooling, in which servers are submerged in non-conductive dielectric fluids. Immersion cooling not only eliminates hotspots but also produces heat that can be repurposed for district heating or agriculture.
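Because cooling is only part of a facility’s load, a 30-50% cut in cooling energy translates into a smaller, though still meaningful, cut in total power. The sketch below expresses loads per unit of IT power; the starting split (cooling at 0.4, other overhead at 0.1, for a PUE of 1.5) is an illustrative assumption, and the function name is hypothetical.

```python
def pue_after_cooling_cut(cooling_per_it: float, other_per_it: float,
                          cooling_cut: float) -> float:
    """PUE after reducing cooling energy by `cooling_cut` (0.3 means 30%).

    Loads are expressed per unit of IT power, so IT itself contributes 1.0.
    """
    return 1.0 + cooling_per_it * (1.0 - cooling_cut) + other_per_it


baseline = pue_after_cooling_cut(0.4, 0.1, 0.0)  # assumed starting point: PUE 1.5
for cut in (0.3, 0.5):
    new_pue = pue_after_cooling_cut(0.4, 0.1, cut)
    saving = 1.0 - new_pue / baseline  # same IT load, so facility power scales with PUE
    print(f"{cut:.0%} cooling cut: PUE {baseline:.2f} -> {new_pue:.2f}, "
          f"facility power down {saving:.0%}")
```

Under these assumptions, total facility draw falls by roughly 8-13% at the same IT load, which is why liquid cooling is usually paired with other efficiency measures.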

Cutting-edge hybrid approaches, such as Shumate Engineering’s Hybrid Adiabatic Fluid Cooler, are saving up to 97% in water usage while halving energy costs. Meanwhile, real-time, AI-driven management systems such as Ecolab’s 3D TRASAR monitor and tune cooling performance, cutting cooling energy needs by 10% and boosting efficiency by up to 50%. NVIDIA’s newest clusters pair liquid cooling with onboard energy storage (capacitors) that smooths power swings, reducing peak power demand by up to 30%.
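Smoothing peak demand with onboard storage is a form of peak shaving: storage discharges when the load exceeds a grid cap and recharges when the load falls below it. How NVIDIA implements this is not described here, so the following is a generic, lossless sketch with hypothetical names and numbers, not NVIDIA’s design.

```python
def peak_shave(load_kw: list[float], grid_cap_kw: float,
               capacity_kwh: float, step_h: float) -> list[float]:
    """Return grid draw per time step when a lossless store (starting full)
    covers load above `grid_cap_kw` and recharges from headroom below it."""
    stored_kwh = capacity_kwh
    grid_kw = []
    for demand in load_kw:
        if demand > grid_cap_kw:
            # Discharge to cover the excess, limited by the energy left in storage.
            supplied_kwh = min((demand - grid_cap_kw) * step_h, stored_kwh)
            stored_kwh -= supplied_kwh
            grid_kw.append(demand - supplied_kwh / step_h)
        else:
            # Recharge using headroom under the cap, limited by free capacity.
            charged_kwh = min((grid_cap_kw - demand) * step_h,
                              capacity_kwh - stored_kwh)
            stored_kwh += charged_kwh
            grid_kw.append(demand + charged_kwh / step_h)
    return grid_kw


# Hypothetical rack load swinging between compute-heavy and idle-ish phases.
load = [100.0, 60.0, 100.0, 60.0]
print(peak_shave(load, grid_cap_kw=80.0, capacity_kwh=20.0, step_h=1.0))
```

With enough storage, the grid in this toy case sees a flat 80 kW instead of 100 kW peaks (a 20% cut); with too little storage, the peaks are only partly trimmed.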

The upfront investment is significant and retrofits can be complex, but long-term savings (up to 40% in operating costs) are quickly making these solutions mainstream—paving the way for higher power densities as AI expands.

Efficient Hardware and Algorithms

Beyond cooling, hardware and algorithmic innovations are crucial:

· Power capping reduces GPU draw to 60-80% of max, balancing performance with lower consumption. MIT’s Clover tool can dynamically enable this, saving 20-40% in energy.

· Integer-based computation (e.g., BitEnergy’s L-Mul, which approximates floating-point multiplication with integer addition) is emerging as an alternative to traditional floating-point math, cutting energy use by up to 95% for some operations.

· Smaller, more efficient AI models are gaining traction, using specialized architectures to do more with less compute.

· NVIDIA’s 800 VDC power architecture reduces conversion and distribution losses, while modular server designs from Cisco, Lenovo, and AMD optimize resource allocation and prevent wasteful overprovisioning.

· AI-driven workload management analyzes server loads, optimizing real-time distribution to cut energy use in the cloud by 20%.

· Geographic load balancing and siting data centers in cooler or greener locations can save another 30% on cooling.
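Power capping saves energy only when performance drops by less than power does, because energy is power multiplied by time. A minimal sketch, assuming the GPU runs at its cap for the whole job; the 10% slowdown in the example is hypothetical rather than a measured figure, and the helper names are illustrative.

```python
def capped_energy_ratio(power_fraction: float, slowdown: float) -> float:
    """Energy with a cap relative to uncapped: power falls to `power_fraction`
    of the original while runtime stretches by `slowdown` (1.1 means 10% longer)."""
    return power_fraction * slowdown


def break_even_slowdown(power_fraction: float) -> float:
    """Largest slowdown at which capping still saves energy."""
    return 1.0 / power_fraction


ratio = capped_energy_ratio(0.7, 1.1)  # cap at 70% power, job takes 10% longer
print(f"Energy saved: {1.0 - ratio:.0%}")
print(f"Break-even slowdown at a 70% cap: {break_even_slowdown(0.7):.2f}x")
```

Here a 70% cap that stretches runtime by 10% cuts energy by about 23%; the cap stops paying off once a job runs more than about 1.43 times as long.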

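These high-tech solutions require sophisticated integration. Most of them shrink the IT load itself, while cooler siting trims cooling overhead; combined with advanced cooling, they let industry leaders run at a Power Usage Effectiveness (PUE) approaching 1.1, close to the ideal of 1.0.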
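The integer-based approach in the list above rests on a classic trick: a floating-point number’s bit pattern behaves roughly like a scaled logarithm of its value, so adding two bit patterns as integers approximately multiplies the numbers (Mitchell’s approximation). L-Mul adds a small constant to the mantissa sum to reduce the error. The sketch below is a simplified illustration of that idea on 32-bit floats, not BitEnergy’s implementation; it handles only products that stay in the normal float32 range, and the 2^-4 offset is taken here as an assumption.

```python
import struct

FLOAT32_ONE_BITS = 0x3F800000  # bit pattern of 1.0f (exponent bias 127 shifted left 23 bits)
MANTISSA_BITS = 23
OFFSET = 1 << (MANTISSA_BITS - 4)  # assumed L-Mul-style offset of 2**-4, in mantissa units


def f32_bits(x: float) -> int:
    """Reinterpret a float (rounded to float32) as an unsigned 32-bit integer."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_f32(bits: int) -> float:
    """Reinterpret an unsigned 32-bit integer as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def approx_mul(a: float, b: float) -> float:
    """Approximate a * b with one integer addition on the float32 bit patterns."""
    if a == 0.0 or b == 0.0:
        return 0.0
    sign = -1.0 if (a < 0.0) != (b < 0.0) else 1.0
    # Exponent and mantissa fields add together; subtracting 1.0's bits removes the
    # doubled exponent bias, and any mantissa carry spills into the exponent field.
    bits = f32_bits(abs(a)) + f32_bits(abs(b)) - FLOAT32_ONE_BITS + OFFSET
    return sign * bits_f32(bits)


for a, b in [(3.0, 5.0), (1.5, 1.5), (0.3, 7.0)]:
    print(f"{a} * {b} = {a * b:.4f}, approx {approx_mul(a, b):.4f}")
```

For normal inputs this stays within about 8% of the exact product. Hardware gains come from the fact that an integer adder is far cheaper in silicon and energy than a floating-point multiplier.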

Renewable and Alternative Energy Integration

To break the fossil fuel link, data centers are rapidly integrating renewables and new alternatives. Solar and battery hybrids, managed by smart software, are cutting costs by 38% and emissions by nearly half in some e-commerce centers. Trinasolar’s platform, for example, intelligently orchestrates renewables for major hyperscale installations.

Some centers already run on 100% wind and solar. Others are experimenting with small modular nuclear reactors (SMRs) and hydrogen fuel cells for grid independence; ECL’s pilots reach a PUE of 1.1 without relying on the grid. Companies like Oklo and NuScale are developing SMR projects aimed at powering AI hubs. xAI’s Colossus cluster, which initially relied on gas turbines, is now moving to a 150 MW grid connection backed by battery storage.

Regulation is catalyzing change: the EU’s Green Data Centre standard will require 100% renewable power by 2032. The main challenge is intermittency—AI-powered forecasting and energy storage are closing the gap.
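Storage narrows the intermittency gap by shifting surplus solar output into hours when the sun is down. The sketch below is a simplified hourly energy balance with a lossless battery that starts empty; the function name and the load and solar profiles are hypothetical.

```python
def renewable_share(load_kw: list[float], solar_kw: list[float],
                    battery_kwh: float, step_h: float = 1.0) -> float:
    """Fraction of total load met by solar, directly or via a lossless battery."""
    if len(load_kw) != len(solar_kw):
        raise ValueError("load and solar series must have the same length")
    stored = 0.0
    renewable_kwh = 0.0
    total_kwh = 0.0
    for demand, solar in zip(load_kw, solar_kw):
        direct = min(demand, solar)
        # Surplus solar charges the battery; anything beyond its capacity is curtailed.
        stored = min(battery_kwh, stored + (solar - direct) * step_h)
        # Remaining demand draws on the battery first; the grid covers the rest.
        from_battery = min((demand - direct) * step_h, stored)
        stored -= from_battery
        renewable_kwh += direct * step_h + from_battery
        total_kwh += demand * step_h
    return renewable_kwh / total_kwh


load = [10.0, 10.0, 10.0, 10.0]  # flat hypothetical data center load (kW)
solar = [0.0, 20.0, 20.0, 0.0]   # hypothetical midday solar peak (kW)
print(renewable_share(load, solar, battery_kwh=0.0))   # solar only
print(renewable_share(load, solar, battery_kwh=20.0))  # solar plus storage
```

In this toy profile, adding 20 kWh of storage raises the renewable share from 50% to 75%; real systems would also account for round-trip losses and forecasting error.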

The Road Ahead

With cumulative global data center investment projected to reach $6.7 trillion by 2030, and over $300 billion earmarked for AI infrastructure in 2025 alone, industry innovation will determine whether this boom is sustainable. Supportive policies, streamlined permitting, and incentives for green technology will speed adoption.

The result? A robust ecosystem where AI’s transformative promise is realized without overburdening the grid or the planet. The data center of the future won’t just power AI—it will redefine what “sustainability at scale” looks like.

What trends are you seeing in your city? Share your thoughts in the comments!