Perceptual AI: How AI Learned to Read Images of Text

DeepSeek-OCR and the Future of Optical Compression

In recent times, out of hundreds of papers that are being published, some warrant such attention that you cannot ignore.

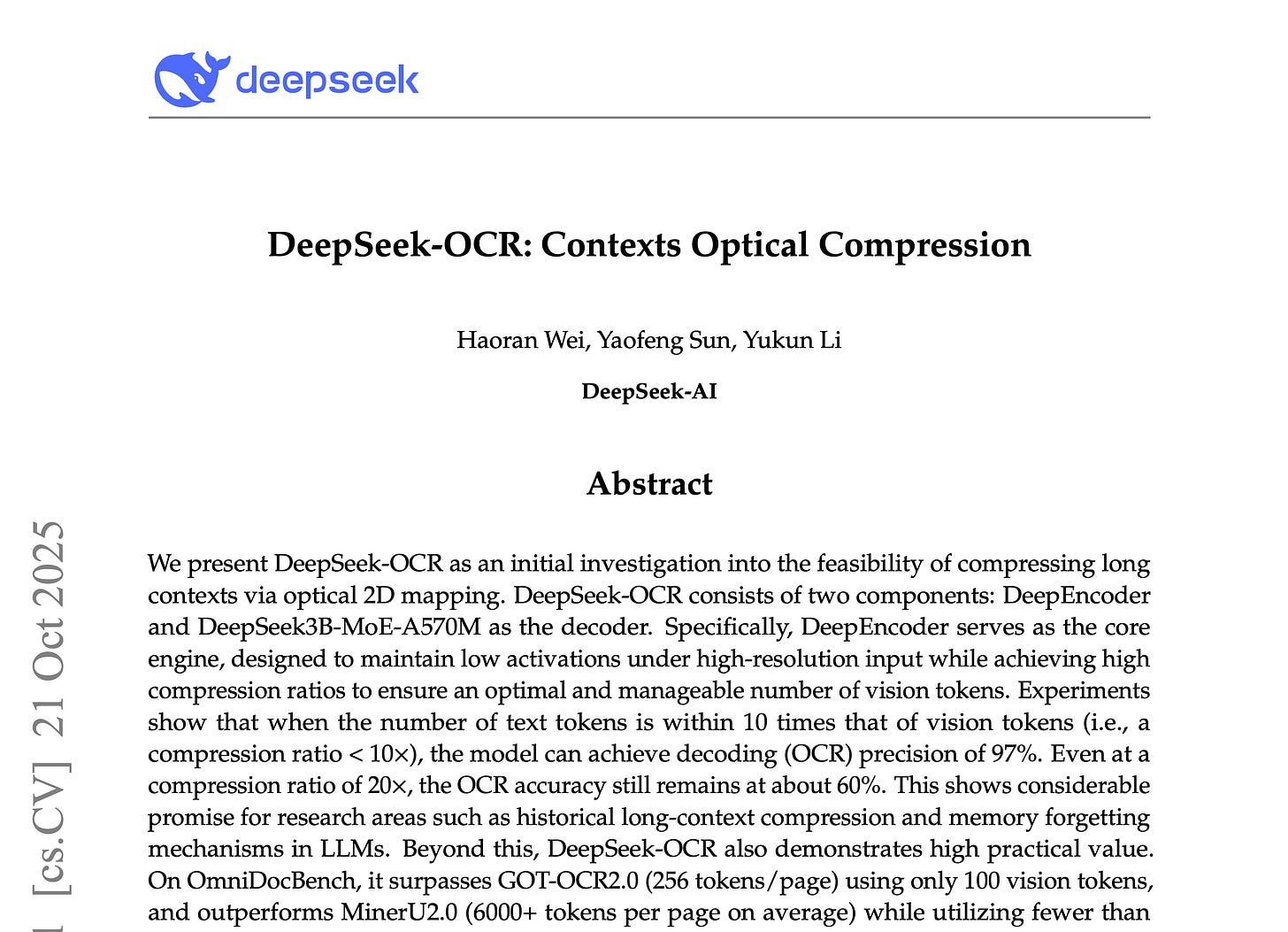

One such paper has been published by Deepseek yesterday, Oct 21 2025. It’s title has OCR (“DeepSeek-OCR: Contexts Optical Compression”), but upon reading I found it much more beyond OCR. Read here

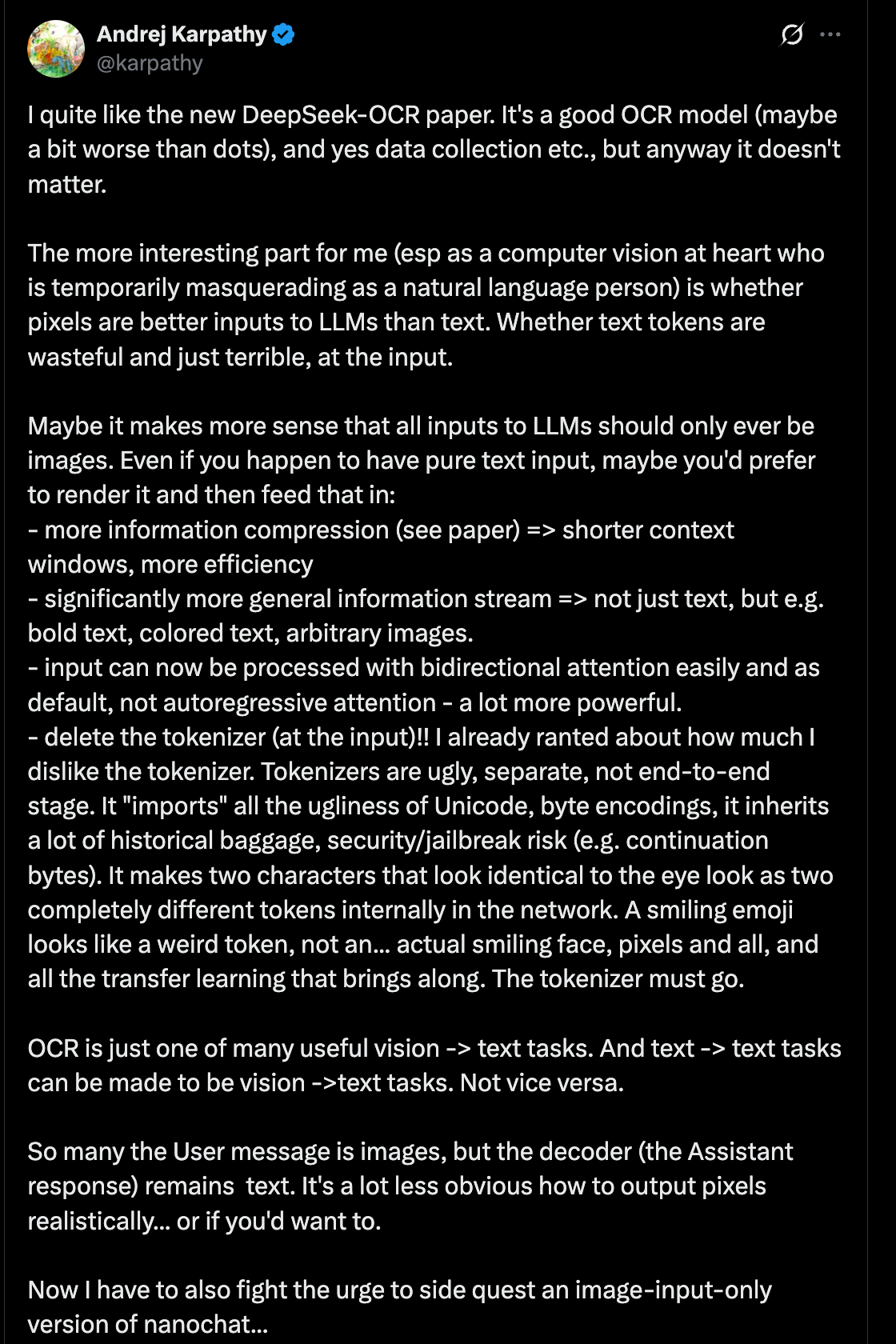

This also garnered Andrej Karpathy’s attention that he tweeted about it highlighting the importance of this approach where we will have all inputs to LLMs are going to be images in future. As much as it is exciting to see this breakthrough (Deepseek folks calls it “experimentation”), there are trade-offs in this process. Look at the outlined at the bottom of this article.

Andrej makes some more suggestions as we can see above to make LLMs better. While we would see LLMs adopting those changes in due course, I would like to sum it up as follows.

“If a picture is worth a thousand words, DeepSeek-OCR proves that one image of text may be worth ten thousand tokens.”

Now, let’s dive-in to understand what this all means.

The Problem with Reading Everything

Large language models (LLMs) are brilliant but inefficient readers. Every word you give them turns into a “token,” a unit of meaning they must process.The longer the document, the more tokens — and the higher the computational cost.

For long documents, the cost grows quadratically.

Double the context length, and the compute can grow fourfold or more.

That’s why even the most advanced LLMs struggle with long PDFs, research papers, or books.

The research team behind DeepSeek-OCR asked a radical question:

What if we stopped giving the AI words altogether — and showed it pictures of the text instead?

From Tokens to Vision: A Paradigm Shift

Text is visual by nature. Humans recognize letters not as strings of code, but as shapes, spacing, and rhythm. DeepSeek-OCR takes that same principle and translates it into computational form.

Core Idea, simplified:

Instead of feeding text directly into a model, DeepSeek-OCR:

Renders text as an image.

Every page becomes a high-resolution visual representation.Compresses that image into “vision tokens.”

A vision encoder captures only the key details — layout, letters, font geometry — while ignoring redundancy.Decodes those tokens back into text.

A specialized language model reads these compact visual cues and reconstructs the full text output.

The process:

Text → Image → Vision Tokens → Reconstructed Text.

Inside the Architecture

1. DeepEncoder — The Optical Compressor

This component functions like an intelligent camera.It scans the text image and extracts its essence into a small set of vision tokens. Each token summarizes a section of the image, reducing thousands of text tokens into hundreds of visual ones — roughly a 10× reduction in size.

2. DeepSeek3B-MoE-A570M — The Vision-Language Decoder

A “mixture-of-experts” model (MoE) serves as the reader.

It interprets the compressed tokens, reconstructs language, and restores punctuation, spacing, and syntax.

Together, these systems create an end-to-end “optical compression” pipeline.

Key metric: At 10× compression, the system achieves about 97 % accuracy.

Even at 20× compression, it maintains around 60 %, proving that meaningful text survives substantial compression.

Why This Matters

Breaking the Context Wall

DeepSeek-OCR redefines how we handle context length. By transforming text into visual form, it avoids the bottleneck that plagues conventional models.

Efficiency: Fewer tokens mean faster inference and lower cost.

Scalability: Enables processing of entire books or archives within feasible compute budgets.

Multimodality: Merges visual and linguistic understanding — a precursor to unified perception models.

This isn’t merely a technical optimization; it’s a new way of thinking about information density.

Practical Implications

1. Document Intelligence

Reading contracts, forms, or legal texts without exceeding context windows.

2. Archival Digitization

Converting scanned manuscripts into compact, searchable digital form.

3. Research and Education

Allowing AI systems to read textbooks, dissertations, or datasets without fragmentation.

4. Accessibility

Enhancing understanding of complex page layouts, mixed languages, and embedded graphics.

The Trade-Offs

Compression always comes at a cost.

Push the ratio too far, and meaning begins to decay.

Beyond 10×, certain nuances — punctuation, alignment, rare characters — are lost.

Additionally, the approach relies on clear, consistent input. Handwriting, skewed scans, or multilingual layouts can still challenge the encoder.

This makes DeepSeek-OCR less a replacement for text-based LLMs and more a strategic companion — ideal when you need to see structure, not just read content.

The Broader Implication: Reading as Perception

DeepSeek-OCR is part of a larger shift toward perceptual AI — systems that interpret the world through multiple sensory channels. Language, vision, and structure are merging into a single reasoning loop.

Where early LLMs parsed words, this new generation perceives context.

It can understand how information looks, not just what it says.

That capability has enormous implications for digital cognition — from adaptive textbooks to reasoning across multimodal data streams.

The Road Ahead

Future work will likely focus on:

Adaptive compression: Dynamically adjusting token density by page complexity.

Cross-modal retrieval: Linking visual cues with semantic memory for instant recall.

Integration with long-context transformers: Combining optical input with direct text for hybrid understanding.

DeepSeek-OCR’s approach may well become foundational to how large systems store, compress, and recall written information at planetary scale.

Closing Reflection

We have spent decades teaching machines to read. Now, we’re teaching them to see what they read.

By converting language into light and geometry, DeepSeek-OCR bridges human and machine perception in a way that feels both inevitable and extraordinary.

Key takeaway: The future of AI literacy won’t depend on longer context windows — it will depend on more intelligent compression.

Fascinating. Who knew LLMs were such slow readrs?